Installing a Hadoop, HBase, ZooKeeper, and Phoenix Pseudo-Cluster with Docker

Prerequisites:

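- Docker installed

- At least 8 GB of memory allocated to Docker containers

- A shell command line or the macOS Terminal

- Kitematic installed (optional; plain docker commands work as well)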

Hadoop, HBase, ZooKeeper, and Phoenix are already installed in the mysaber/hadoop:0.2.2 image, so only a little configuration is needed.

Pull the Docker image:

$ docker pull mysaber/hadoop:0.2.2

List the local Docker images:

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

mysaber/hadoop 0.2.2 89e80d91b1a5 3 days ago 4.14GB

If the image appears in the list, the pull succeeded.
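To make the same check from a script instead of reading the table by eye, here is a minimal sketch. The helper name has_image is hypothetical. It reads a listing in the format produced by docker images --format '{{.Repository}}:{{.Tag}}' from standard input and succeeds only if the given reference appears as a whole line. Reading from stdin keeps it testable without Docker.

```shell
#!/usr/bin/env bash
# has_image REF: succeed if REF (repository:tag) appears as a whole line on stdin.
# Hypothetical helper, meant to be fed by:
#   docker images --format '{{.Repository}}:{{.Tag}}'
has_image() {
  # -q quiet, -x match the whole line, -F treat REF as a fixed string
  grep -qxF -- "$1"
}

# Usage (requires Docker): pull only when the image is missing
#   docker images --format '{{.Repository}}:{{.Tag}}' | has_image mysaber/hadoop:0.2.2 \
#     || docker pull mysaber/hadoop:0.2.2
```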

Create the startup script start-container.sh:

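#!/bin/bash
# Usage: bash start-container.sh [N]   (N = total number of nodes, default 3)
N=${1:-3}

# Host directory mounted into every container at /opt/docker (the path after the
# colon in -v). Set it before running, e.g. localPath=/path/to/dir bash start-container.sh
: "${localPath:?Set localPath to the host directory to mount at /opt/docker}"

# Create the user-defined network "hadoop" if it does not exist yet,
# so the containers can reach each other by hostname.
docker network inspect hadoop &> /dev/null || docker network create --driver=bridge hadoop

# start hadoop master container
docker rm -f master &> /dev/null
echo "start hadoop-master container..."
docker run -itd \
  -v "${localPath}":/opt/docker \
  --net=hadoop \
  -p 50070:50070 \
  -p 2181:2181 \
  -p 8088:8088 \
  -p 8765:8765 \
  -p 60010:60010 \
  -p 16020:16020 \
  -p 16000:16000 \
  -e ZOO_MY_ID=1 \
  --name master \
  --hostname master \
  mysaber/hadoop:0.2.2 &> /dev/null

# start hadoop slave containers
i=1
while [ $i -lt $N ]
do
  docker rm -f slave$i &> /dev/null
  echo "start hadoop-slave$i container..."
  docker run -itd \
    -v "${localPath}":/opt/docker \
    --net=hadoop \
    -p $((2181 + i)):2181 \
    --name slave$i \
    --hostname slave$i \
    -e ZOO_MY_ID=$((i + 1)) \
    mysaber/hadoop:0.2.2 &> /dev/null
  i=$(( i + 1 ))
done

# get into hadoop master container
#docker exec -it master bash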

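Run the script on the host, setting localPath to the host directory you want to share with the containers (for example, localPath=/path/to/shared/dir bash start-container.sh). It starts three containers: one master and two slaves (slave1 and slave2).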
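The loop in start-container.sh gives master host port 2181 and ZOO_MY_ID=1, and gives each slave i host port 2181+i (mapped to its ZooKeeper port 2181) and ZOO_MY_ID=i+1. A minimal dry-run sketch that prints this plan for N nodes without starting anything can help when changing N. plan_containers is a hypothetical helper, not part of the image.

```shell
#!/usr/bin/env bash
# plan_containers N: print "name host_zk_port zoo_my_id" for each container that
# start-container.sh would create with N nodes (hypothetical dry-run helper).
plan_containers() {
  local n=${1:-3}
  # master publishes ZooKeeper on host port 2181 and uses ZOO_MY_ID=1
  echo "master 2181 1"
  local i=1
  while [ "$i" -lt "$n" ]; do
    # slave i publishes its port 2181 on host port 2181+i and uses ZOO_MY_ID=i+1
    echo "slave$i $((2181 + i)) $((i + 1))"
    i=$((i + 1))
  done
}
```

For the default of three nodes, plan_containers 3 lists master on 2181, slave1 on 2182, and slave2 on 2183, which matches the PORTS column of docker ps.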

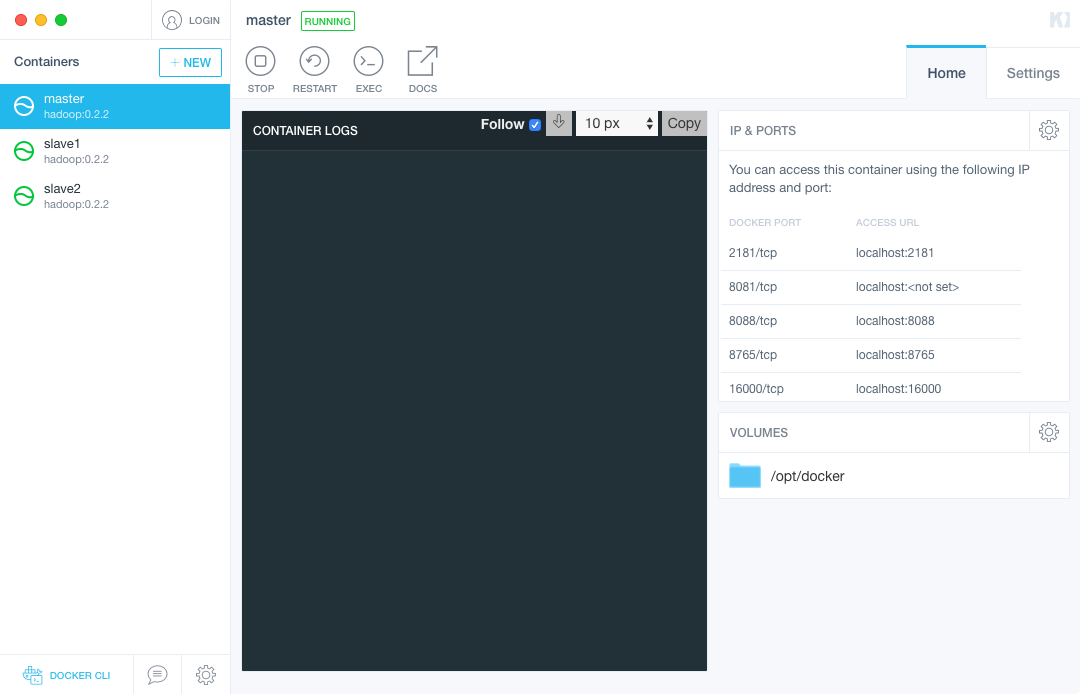

In Kitematic, view the running containers and select master. Click EXEC to open a shell inside the container.

If Kitematic is not installed, use the following commands to get a shell inside the container:

$ docker ps -a
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
2c6e954fd7a0 mysaber/hadoop:0.2.2 "/bin/bash" 37 minutes ago Up 37 minutes 8081/tcp, 8088/tcp, 8765/tcp, 50070/tcp, 60010/tcp, 0.0.0.0:2183->2181/tcp slave2
88deb95f7195 mysaber/hadoop:0.2.2 "/bin/bash" 37 minutes ago Up 37 minutes 8081/tcp, 8088/tcp, 8765/tcp, 50070/tcp, 60010/tcp, 0.0.0.0:2182->2181/tcp slave1
cd06942e2c58 mysaber/hadoop:0.2.2 "/bin/bash" 37 minutes ago Up 37 minutes 0.0.0.0:2181->2181/tcp, 0.0.0.0:8088->8088/tcp, 0.0.0.0:8765->8765/tcp, 0.0.0.0:16000->16000/tcp, 0.0.0.0:16020->16020/tcp, 0.0.0.0:50070->50070/tcp, 0.0.0.0:60010->60010/tcp, 8081/tcp master

Find the CONTAINER ID of master in the list (cd06942e2c58 here) and open a shell in it:

$ docker exec -it cd06942e2c58 /bin/bash
[root@master /]#

If this prompt appears, you are inside the container. Because the script names the container master, docker exec -it master /bin/bash works as well.

In the master container, edit the HBase configuration file:

[root@master /]# vim /opt/hbase/conf/hbase-site.xml

Add the following property inside the <configuration></configuration> element, at the same level as the other property entries:

<property>

<name>fs.default.name</name>

<value>hdfs://master:9000</value>

</property>
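If you prefer not to edit the file interactively in vim, the same change can be scripted. This is a minimal sketch, and add_property is a hypothetical helper. It inserts a Hadoop-style property element just before the closing configuration tag and does nothing if a property with that name already exists, so it is safe to re-run. It assumes that </configuration> sits on a line of its own, as in the stock configuration files.

```shell
#!/usr/bin/env bash
# add_property FILE NAME VALUE: insert a <property> element just before
# </configuration> in FILE, unless a property with that name already exists.
# Hypothetical helper; assumes </configuration> is on its own line.
add_property() {
  local file=$1 name=$2 value=$3
  # Skip if the property is already present, so repeated runs are harmless.
  if grep -qF "<name>$name</name>" "$file"; then
    return 0
  fi
  # Print the new property right before the closing tag, then copy every line.
  awk -v n="$name" -v v="$value" '
    /<\/configuration>/ {
      print "  <property>"
      print "    <name>" n "</name>"
      print "    <value>" v "</value>"
      print "  </property>"
    }
    { print }
  ' "$file" > "$file.tmp" && mv "$file.tmp" "$file"
}

# Usage inside the master container:
#   add_property /opt/hbase/conf/hbase-site.xml fs.default.name hdfs://master:9000
```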

Create the script start-all.sh in ${localPath} on the host (or, inside the container, go to /opt/docker, where ${localPath} is mounted, and run vim start-all.sh):

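#!/bin/bash
echo "Starting master..."
# Stop any Hadoop daemons that are still running.
sh /opt/hadoop/sbin/stop-all.sh
# Format the NameNode. WARNING: this erases existing HDFS metadata on every run;
# if the NameNode was formatted before, it asks for confirmation (Y/N).
sh /opt/hadoop/bin/hadoop namenode -format
sh /opt/hadoop/sbin/start-all.sh
# Take HDFS out of safe mode (read-only) so HBase can write to it.
sh /opt/hadoop/bin/hdfs dfsadmin -safemode leave
sh /opt/zookeeper/bin/zkServer.sh start
echo "Hadoop and ZooKeeper on master started!"

# On slave1: write ZooKeeper's myid (2) and start ZooKeeper.
ssh root@slave1 > /dev/null 2>&1 << eeooff
echo "2" > "/opt/zookeeper/zkdata/myid"
cat /opt/zookeeper/zkdata/myid
sh /opt/zookeeper/bin/zkServer.sh start
exit
eeooff
echo "ZooKeeper on slave1 started!"

# On slave2: write ZooKeeper's myid (3) and start ZooKeeper.
ssh root@slave2 > /dev/null 2>&1 << eeooffw
echo "3" > "/opt/zookeeper/zkdata/myid"
cat /opt/zookeeper/zkdata/myid
sh /opt/zookeeper/bin/zkServer.sh start
exit
eeooffw
echo "ZooKeeper on slave2 started!"

sh /opt/hbase/bin/start-hbase.sh
echo "HBase started!"
# Open the interactive HBase shell.
sh /opt/hbase/bin/hbase shell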
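The two ssh blocks in start-all.sh repeat the same steps with a different ID. As a sketch of how they could be generalized to any number of slaves, assuming the hostnames slave1..slaveN and the myid scheme from start-container.sh (slave i gets i+1), the hypothetical helper start_slave_zk below runs the same commands over ssh. Setting REMOTE=echo prints the commands instead of running them, which is handy for a dry run.

```shell
#!/usr/bin/env bash
# start_slave_zk COUNT: on slave1..slaveCOUNT, write ZooKeeper's myid (slave i gets
# i+1, matching ZOO_MY_ID in start-container.sh) and start ZooKeeper.
# Hypothetical helper; REMOTE defaults to ssh, set REMOTE=echo for a dry run.
start_slave_zk() {
  local count=$1 remote=${REMOTE:-ssh} i=1
  while [ "$i" -le "$count" ]; do
    # The second argument is executed by the shell on the remote host.
    $remote "root@slave$i" \
      "echo $((i + 1)) > /opt/zookeeper/zkdata/myid && sh /opt/zookeeper/bin/zkServer.sh start"
    i=$((i + 1))
  done
}

# Usage inside master, replacing the two heredoc blocks:
#   start_slave_zk 2
```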

Switch to the master container and run:

sh-4.2# cd /opt/docker/

sh-4.2# sh start-all.sh

...

...

HBase started!

2019-10-24 01:13:29,343 WARN [main] util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

HBase Shell

Use "help" to get list of supported commands.

Use "exit" to quit this interactive shell.

Version 2.0.0, r7483b111e4da77adbfc8062b3b22cbe7c2cb91c1, Sun Apr 22 20:26:55 PDT 2018

Took 0.0032 seconds

hbase(main):001:0>

Output like the above means that the Hadoop, ZooKeeper, and HBase cluster is up. You can verify it in the HBase shell:

hbase(main):001:0> list

TABLE

0 row(s)

Took 0.6531 seconds

=> []

hbase(main):002:0> create 'user','info'

Created table user

Took 0.9004 seconds

=> Hbase::Table - user

If both commands succeed, listing and creating tables in HBase work. If something goes wrong, look for exceptions in the corresponding log files under /opt/hbase/logs/.
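To find those exceptions quickly, a minimal sketch is to grep every log file in the directory for ERROR or Exception. scan_logs is a hypothetical helper, and it assumes the log files end in .log. It exits non-zero when nothing matches (or when no .log files exist).

```shell
#!/usr/bin/env bash
# scan_logs DIR: print every line containing ERROR or Exception in DIR/*.log,
# prefixed with the file name. Hypothetical helper.
scan_logs() {
  # -H always prints the file name; -E enables the alternation ERROR|Exception
  grep -H -E 'ERROR|Exception' "$1"/*.log
}

# Usage inside the container:
#   scan_logs /opt/hbase/logs
```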

You can also troubleshoot from the list of running services. In master, list the services currently running in the container:

## Run jps
sh-4.2# jps
896 SecondaryNameNode    # Hadoop SecondaryNameNode
1507 HMaster             # HBase HMaster
1379 QuorumPeerMain      # ZooKeeper
1062 ResourceManager     # Hadoop ResourceManager
1611 HRegionServer       # HBase HRegionServer
652 NameNode             # Hadoop NameNode
2094 Jps

If all six services above are running, the services on master are healthy. Next, check the slaves:

## Switch to slave1 (slave2 works the same way)
sh-4.2# ssh slave1
Last login: Tue Oct 8 09:22:02 2019 from master
## Run jps
[root@slave1 ~]# jps
480 HRegionServer    # HBase HRegionServer
130 DataNode         # Hadoop DataNode
402 QuorumPeerMain   # ZooKeeper
245 NodeManager      # Hadoop NodeManager
856 Jps

slave1 and slave2 should run the same services. If one is missing, check its startup and runtime logs in the logs directory of the corresponding installation in that container: /opt/hadoop/logs, /opt/hbase/logs, or /opt/zookeeper/logs.
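Comparing jps output against the expected list by eye is error-prone. As a minimal sketch, the hypothetical helper missing_services reads jps output (lines of the form "<pid> <MainClassName>") on stdin and prints each expected service that is not running. Its exit status is 1 if anything is missing.

```shell
#!/usr/bin/env bash
# missing_services SERVICE...: read `jps` output on stdin and print each expected
# service that is not running; return 1 if any is missing. Hypothetical helper.
missing_services() {
  local running missing=0 svc
  # jps prints "<pid> <MainClassName>"; keep only the class names.
  running=$(awk '{ print $2 }')
  for svc in "$@"; do
    # -x: whole-line match, so NameNode does not match SecondaryNameNode
    if ! printf '%s\n' "$running" | grep -qxF -- "$svc"; then
      echo "$svc"
      missing=1
    fi
  done
  return "$missing"
}

# Usage on master:
#   jps | missing_services NameNode SecondaryNameNode ResourceManager QuorumPeerMain HMaster HRegionServer
# Usage on slave1 / slave2:
#   jps | missing_services DataNode NodeManager QuorumPeerMain HRegionServer
```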

Configuring Phoenix

Switch to the master container and go to the Phoenix bin directory:

[root@slave1 ~]# ssh master

Last login: Tue Oct 8 09:22:02 2019 from master

[root@master ~]# cd /opt/phoenix/bin/

[root@master bin]#

Start the Phoenix Query Server:

[root@master bin]# ./queryserver.py start

starting Query Server, logging to /tmp/phoenix/phoenix-root-queryserver.log

## Check that the Query Server is running

[root@master bin]# jps

896 SecondaryNameNode

2624 Jps

1507 HMaster

1379 QuorumPeerMain

2596 QueryServer    # Phoenix Query Server

1062 ResourceManager

1611 HRegionServer

652 NameNode
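jps only shows that the QueryServer process exists. The Query Server listens on port 8765 by default, which is the port start-container.sh publishes, so a stricter check is whether that port accepts connections. A minimal sketch follows, assuming bash (it relies on bash's /dev/tcp redirection). wait_for_port is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# wait_for_port HOST PORT [TIMEOUT]: poll once per second until HOST:PORT accepts a
# TCP connection; return 1 if TIMEOUT seconds (default 30) pass first.
# Uses bash's /dev/tcp redirection; hypothetical helper.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30} waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # The subshell opens a connection to HOST:PORT and closes it on exit;
    # a failed redirection makes the subshell exit non-zero.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 1
}

# Usage inside master:
#   wait_for_port localhost 8765 60 && echo "Query Server is accepting connections"
```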

Phoenix command line

Phoenix also provides a command-line tool similar to MySQL's. Run it from Phoenix's bin directory:

[root@master bin]# ./sqlline.py

Setting property: [incremental, false]

Setting property: [isolation, TRANSACTION_READ_COMMITTED]

issuing: !connect jdbc:phoenix: none none org.apache.phoenix.jdbc.PhoenixDriver

Connecting to jdbc:phoenix:

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/opt/phoenix/phoenix-5.0.0-HBase-2.0-client.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/opt/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

19/10/24 02:31:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Connected to: Phoenix (version 5.0)

Driver: PhoenixEmbeddedDriver (version 5.0)

Autocommit status: true

Transaction isolation: TRANSACTION_READ_COMMITTED

Building list of tables and columns for tab-completion (set fastconnect to true to skip)...

133/133 (100%) Done

Done

sqlline version 1.2.0

0: jdbc:phoenix:>

Output like the above means you are connected to Phoenix. Try creating a table (see the notes on creating tables below):

0: jdbc:phoenix:> create table visit_details (
  inc_id varchar(32) not null,
  area_code varchar(32),
  area_name varchar(32),
  department_code varchar(32),
  department_name varchar(32),
  visit_type tinyint default 0,
  patient_code varchar(32) not null,
  address varchar(128),
  address_split varchar(128),
  address_province varchar(32),
  address_city varchar(32),
  address_district varchar(32),
  address_town varchar(32),
  address_street varchar(128),
  gender tinyint default 0,
  age integer,
  visit_at timestamp,
  operate_at timestamp,
  created_at timestamp not null,
  updated_at timestamp not null,
  CONSTRAINT PK PRIMARY KEY (inc_id, patient_code, created_at, updated_at)
);

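Phoenix secondary indexes are global by default, and the configuration that secondary indexes require is already set up in this Docker image. Create an index with: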

0: jdbc:phoenix:> create index op_index on visit_details(operate_at);

No rows affected (5.957 seconds)

Use the !table command to list tables and indexes:

0: jdbc:phoenix:> !table

+------------+--------------+----------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+-----------------+------------+-------------+----------------+--+

| TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SALT_BUCKETS | MULTI_TENANT | VIEW_STATEMENT | VIEW_TYPE | INDEX_TYPE | TRANSACTIONAL | |

+------------+--------------+----------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+-----------------+------------+-------------+----------------+--+

| | | OP_INDEX | INDEX | | | | | ACTIVE | false | null | false | | | GLOBAL | false | |

| | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | null | false | | | | false | |

| | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | null | false | | | | false | |

| | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 | false | | | | false | |

| | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | null | false | | | | false | |

| | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | null | false | | | | false | |

| | | VISIT_DETAILS | TABLE | | | | | | false | null | false | | | | false | |

+------------+--------------+----------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+---------------+-----------------+------------+-------------+----------------+--+

The next step is to import MySQL data into Phoenix with DataX. For notes on using Phoenix, see: Using DataX to Import MySQL into Phoenix (使用DATAX将MySQL导入Phoenix).